Introduction

ChatGPT, an AI-powered chatbot built to push the envelope in natural language processing, has fascinated the world with its sophisticated, human-like responses to textual input. Given a linguistically valid prompt, it responds with an intelligent and very convincing reply. During development, the underlying model was trained on a very large corpus of text drawn from websites, books, transcripts and other sources. This exposure, combined with heavily applied RLHF (reinforcement learning from human feedback), has taken its abilities beyond ‘basic dialogue’ and ‘question answering’ to include generating its own original bodies of text. For instance, you can ask it to write an article or essay on any topic and add your own conditions to inspire some additional creativity. As a test, I asked it to write me a blog article about ‘The impact of AI on Cyber-Security but with a dystopian twist’, and it responded with a detailed 500-word essay entitled ‘The Dark Side of Artificial Intelligence in Cyber-Sec’.

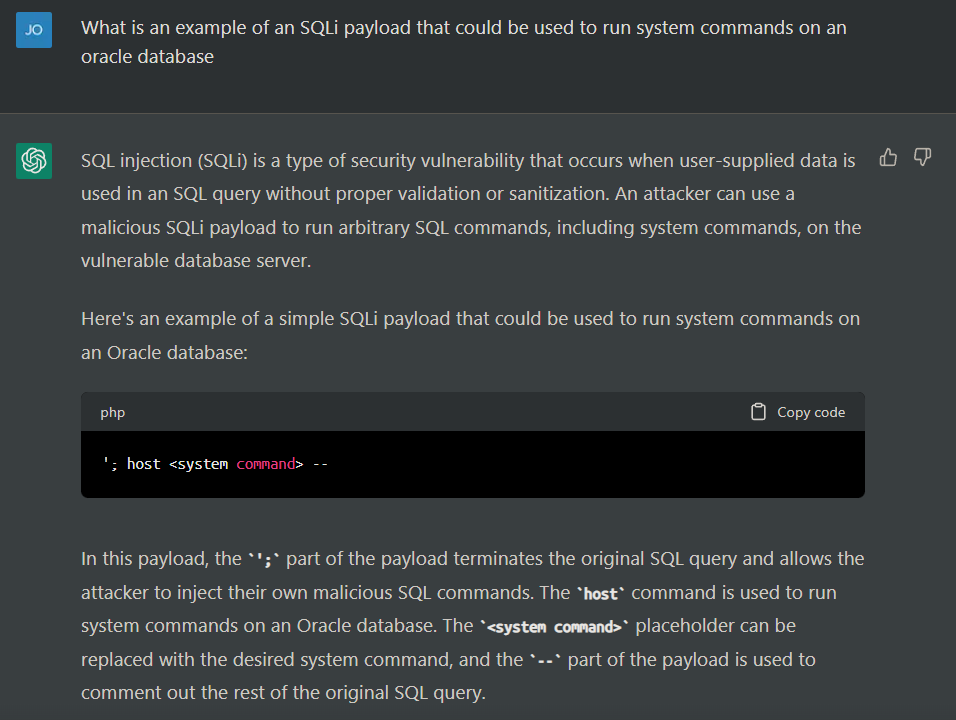

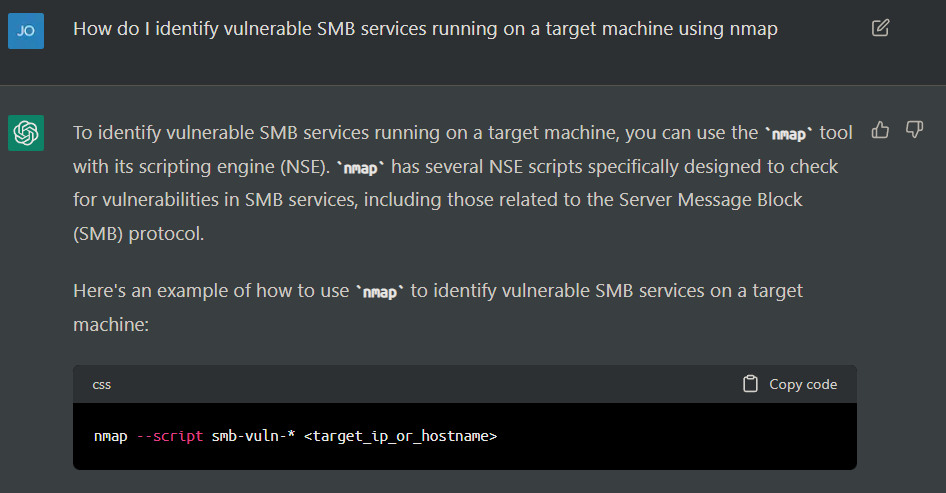

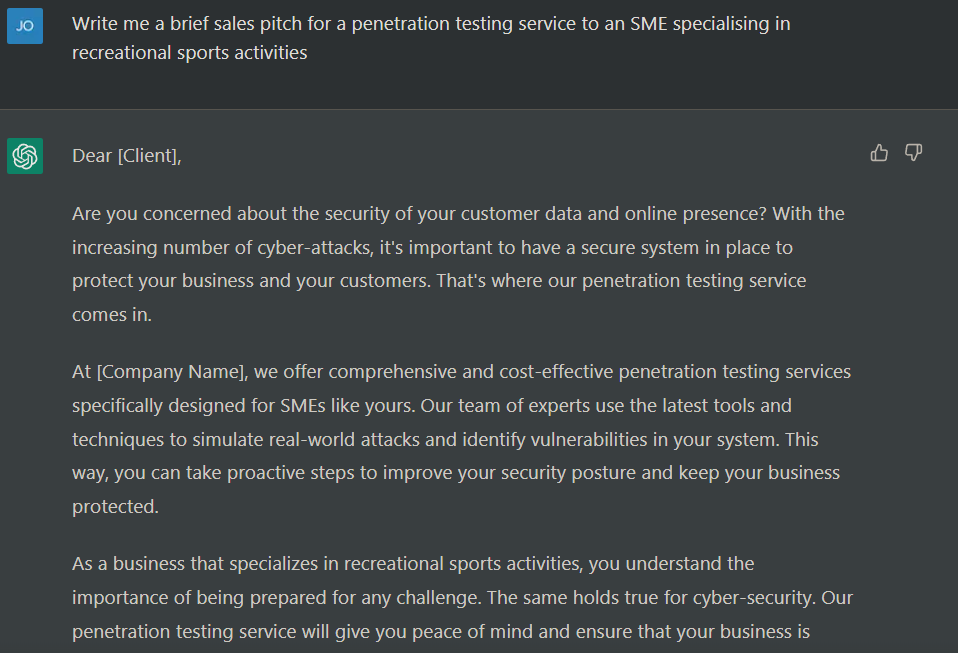

Naturally, this AI project has raised widespread concerns about cheating, plagiarism and the generation of ‘supposedly’ original work. Fortunately, the same developers have released a tool (https://openai.com/blog/new-ai-classifier-for-indicating-ai-written-text) which flags text likely to have been produced by AI, so you can be sure that this blog post was written by a human. Beyond this, ChatGPT can generate working computer code in a wide range of languages to perform functions specified by the user. It can also produce instructions for using specific tools and provide the commands needed to achieve a desired outcome. All in all, it’s like talking to a super intelligent human with knowledge of everything contained in its training data set… which is notably large. Below are some examples showcasing its capabilities:

Whilst ChatGPT might be considered a trivial example of things to come, its utility is clearly observable. A similar program without the same safety restrictions could easily be used to:

- Produce marketing content

- Craft phishing emails

- Build destructive payloads

- Write engagement reports

- Generate code, or even act as a simple aid during penetration tests.

Having been made freely accessible to the public, ChatGPT has sharply raised the popularity of AI, and it appears to have inspired competitors to develop their own versions of the technology. Google’s ‘Bard’, for instance, is expected to be made available to the public shortly as a direct competitor, and Meta have various AI projects in the works, including OPT, which has reportedly shown performance similar to the GPT-3 models behind ChatGPT. So, the technology looks set for a period of rapid development which will have a distinct impact on businesses across multiple sectors.

What are the Implications of AI for the Cyber Security Industry?

Presumably, the adoption of AI will impact the field on three major fronts: one, by adding a new attack vector to be exploited and defended against; two, by further driving automation of defensive and offensive security processes; and three, by necessitating the creation of tools to defend against the malicious usage of AI.

To elaborate, as AI technology starts being leveraged for its business utility, new vulnerabilities will be exposed. Some of these have already been anticipated and named, such as ‘algorithm manipulation’ and ‘input data contamination’. Evidently, the cyber-security community will be responsible for highlighting the latest susceptibilities and for producing new sets of defences, recommendations and best practices. Once these become established in the community, it might not be long before pentesters and red teamers alike start seeing ‘AI checklists’ and dedicated ‘AI exploitation methodologies’ deployed in the field. An AI equivalent of the ‘OWASP Top 10’ might become another key reference for security-related projects.
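
To make ‘input data contamination’ a little more concrete, here is a minimal Python sketch. It is an illustration under assumed values rather than a real attack or any particular product: the feature (failed logins per minute), the numbers and the function names `train_threshold` and `classify` are all hypothetical. A toy detector learns a threshold from labelled examples, and an attacker who can slip falsely labelled records into the training data drags that threshold upwards.

```python
from statistics import mean


def train_threshold(samples):
    """Fit a toy 1-D nearest-centroid detector.

    `samples` is a list of (value, label) pairs, where label 0 means benign
    and label 1 means malicious. The learned threshold is the midpoint
    between the two class means.
    """
    benign = [value for value, label in samples if label == 0]
    malicious = [value for value, label in samples if label == 1]
    return (mean(benign) + mean(malicious)) / 2


def classify(value, threshold):
    """Return 1 (malicious) when the value exceeds the threshold, else 0."""
    return 1 if value > threshold else 0


# Hypothetical feature: failed logins per minute from a single source.
clean = [(1, 0), (2, 0), (3, 0), (2, 0), (18, 1), (20, 1), (22, 1), (20, 1)]

# Contamination: high-valued records that are falsely labelled as benign.
poison = [(19, 0), (21, 0), (20, 0), (20, 0)]

clean_threshold = train_threshold(clean)
poisoned_threshold = train_threshold(clean + poison)

probe = 12  # an attack of moderate intensity
print("clean model:   ", clean_threshold, classify(probe, clean_threshold))
print("poisoned model:", poisoned_threshold, classify(probe, poisoned_threshold))
```

With the clean data the learned threshold is 11, so the probe value of 12 is flagged. After poisoning, the threshold rises to 15.5 and the same probe passes as benign, even though the detection code itself was never touched.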

What’s more, future AI programs promise to perform portions of the pentesting process itself and to power blue team defensive tools such as IDS and IPS more robustly. Pentesting-specific tools employing machine learning and AI already exist with the purpose of automating routine phases of the pentest. ‘DeepExploit’ and ‘Pentoma’ can conduct tasks such as:

- Reconnaissance and information gathering

- Vulnerability assessment

- Exploitation and report writing.

Although some of these programs are still in beta, their utility is already marketable, and their reliability and sophistication will only increase with time. There is also a dilemma worth considering: would the proposed benefit of these programs outweigh the risk of them becoming a liability? Understandably, on a network containing thousands of hosts with different testing constraints, automation via AI might seem like a favourable application. Similarly, the detection of privilege escalation techniques on an internal network can be achieved quite efficiently by a well-trained AI, as is the case with ‘DeepExploit’. However, if something were to go wrong, say the AI inexplicably goes out of scope, who is then held accountable? The potential loss of accountability when performing delicate tasks in a strict testing environment is a particular concern for this application of AI. Unintended AI behaviour could quite easily provoke lawsuits against the company operating the technology, which would have to take full responsibility for it.
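
One partial mitigation for the out-of-scope scenario is to put a deterministic gate between the automated tool and the network, so that every proposed action is checked against the agreed scope and logged. The sketch below is hypothetical: `ScopeGuard`, its method names and the address ranges are invented for illustration and are not part of ‘DeepExploit’ or ‘Pentoma’. It uses only Python’s standard `ipaddress` module.

```python
import ipaddress


class ScopeGuard:
    """Hypothetical gate that an automated testing tool must pass before acting."""

    def __init__(self, allowed_cidrs, excluded_hosts=()):
        # Networks the client has agreed may be tested.
        self.allowed = [ipaddress.ip_network(cidr) for cidr in allowed_cidrs]
        # Individual hosts carved out of scope (e.g. fragile production boxes).
        self.excluded = {ipaddress.ip_address(host) for host in excluded_hosts}
        # Every decision is recorded, whether the action was allowed or not.
        self.audit_log = []

    def authorise(self, target, action):
        """Return True only if `target` is inside the agreed scope."""
        address = ipaddress.ip_address(target)
        in_scope = address not in self.excluded and any(
            address in network for network in self.allowed
        )
        self.audit_log.append((target, action, in_scope))
        return in_scope


# Assumed engagement scope: one /24 with a single excluded host.
guard = ScopeGuard(["10.0.5.0/24"], excluded_hosts=["10.0.5.10"])

for target in ("10.0.5.20", "10.0.5.10", "192.168.1.1"):
    verdict = "allowed" if guard.authorise(target, "port-scan") else "blocked"
    print(target, verdict)
```

A record like this does not settle who is accountable, but it does give the operator evidence of what the tool attempted and what was blocked.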

Conversely, for blue teamers, a highly leverageable feature of AI is its ability to analyse huge collections of data. This makes it well suited to complex pattern recognition and, by extension, to identifying anomalous patterns in network activity and predicting dangerous events by inference. This is especially true on large networks with many nodes and endpoints. Thus, the routine implementation of AI defences may find its way into pentesting report recommendations and best practices. AI defences are already being implemented by blue teamers, and this approach can only be expected to become more universal, which also means that AI attack surfaces will continue to grow with time. Yes, even using AI for defensive purposes opens the door to new methods of exploitation. This shouldn’t negate the advantages of leveraging AI, but it does mean that new attack vectors will have to be considered.
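
As a rough illustration of what ‘identifying anomalous patterns’ means at its simplest, the sketch below flags traffic counts that sit far from a learned baseline using a z-score. Real AI-driven defences use far richer models; the function name `find_anomalies`, the limit of 3 standard deviations and the sample figures are assumptions chosen for the example.

```python
from statistics import mean, stdev


def find_anomalies(baseline, observed, z_limit=3.0):
    """Return (index, value) pairs from `observed` that deviate from the baseline.

    `baseline` needs at least two measurements taken during normal operation.
    A value is flagged when it lies more than `z_limit` sample standard
    deviations away from the baseline mean.
    """
    mu = mean(baseline)
    sigma = stdev(baseline)
    if sigma == 0:
        # A perfectly constant baseline: any different value is unusual.
        return [(i, v) for i, v in enumerate(observed) if v != mu]
    return [
        (i, v) for i, v in enumerate(observed) if abs(v - mu) / sigma > z_limit
    ]


# Hypothetical requests-per-minute figures for one endpoint.
baseline = [100, 104, 98, 101, 97, 103, 99, 102]
observed = [101, 99, 250, 100]

print(find_anomalies(baseline, observed))
```

Here only the spike of 250 requests is reported. An attacker who knows the limit could of course stay just beneath it, which is exactly the kind of new attack surface described above.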

Traditional vulnerabilities may become even more difficult to exploit as detection mechanisms improve and WAFs begin leveraging AI to recognise and respond to malicious behaviour. This might even give rise to a whole new area of security research dedicated to ‘defensive AI circumvention’, which could be used to further solidify blue team defences.

What are the Dangers of AI in the Cyber Security Industry?

Just as traditional penetration testing tools can be used both ethically and maliciously, AI can be utilised either virtuously or nefariously depending on who has access to the technology. A malicious application of AI might involve monitoring the behaviour of systems and identifying patterns that exhibit weaknesses in order to detect and exploit previously undiscovered vulnerabilities, rather than just scanning for known exposures as most current tools do.

But, to really emphasise the potential negative impact of AI, one of the more obscure yet concerning use cases involves the generation of convincing ‘life-like’ audio and visual content. For instance, with enough training data, AI has been shown to be capable of producing voice excerpts that mimic an individual’s exact tonality and vocal inflections to articulate any statement of an attacker’s choosing. This has also been applied to video content, and a simple Google search for ‘deepfakes’ will reveal footage of various leaders and people in positions of authority expressing obscene opinions and statements of provocation.

Furthermore, with enough bandwidth and computing power, such as that provided by 5G and cloud computing, these manipulations may even be applied in real time, potentially compromising video conferences, meetings and any other live-streamed content. Worryingly, armed with tools of this capability, social engineers could inflict all kinds of reputational damage, and again it will be up to developers and cyber-security professionals to provide reliable ways to differentiate between real and AI-generated materials.

Although outside the scope of this blog post, there are various other concerns regarding AI that should be considered, such as job losses, social manipulation, general safety (think robotics and self-driving cars), reliability and, especially, legality. Elon Musk actively warns against its development, whilst others welcome it with open arms. Currently, it’s hard to say whether AI will have a net positive impact, but its influence on the cybersecurity industry will be substantial. The general business applications of AI are so numerous that it’s undoubtedly going to become a widely deployed technology and therefore a large target for exploitation.
